How We Built a High Performance Document Store on RocksDB?

This blog post was co-authored by Mikhail Bautin and Kannan Muthukkaruppan

RocksDB is a popular embeddable persistent key-value store. First open sourced by Facebook in 2012 as a fork of the Google LevelDB project, it has been adapted over the years to a wide range of workloads including database storage engines and application data caching.

In this post, we explain our rationale for selecting RocksDB as a foundational building block for YugabyteDB. We also highlight how we model rows in YugabyteDB as documents that then get stored as multiple key-value pairs in RocksDB.

Why Does a Database Need Another Database?

You may be wondering why a database like YugabyteDB depends on another database like RocksDB. While both have the letters “DB” in their name, they serve significantly different needs.

RocksDB is a monolithic key-value storage engine with somewhat lower-level APIs. For example, key/values are just byte arrays and not typed. It’s designed for fast access, persistence and embedding in the context of a single node. Given the single node focus, it does not consider high availability and geographic data distribution as design goals.

On the other hand, YugabyteDB is a distributed SQL database for building internet-scale, globally-distributed applications with features such as multi-shard (aka distributed) transactions that access keys on different nodes. These nodes can be in a single datacenter/region or can be far apart in different regions (with unpredictable WAN latencies between the regions). YugabyteDB supports YSQL, a fully relational SQL API for scale-out RDBMS workloads, as well as YCQL, a SQL-based flexible schema API for internet-scale workloads.

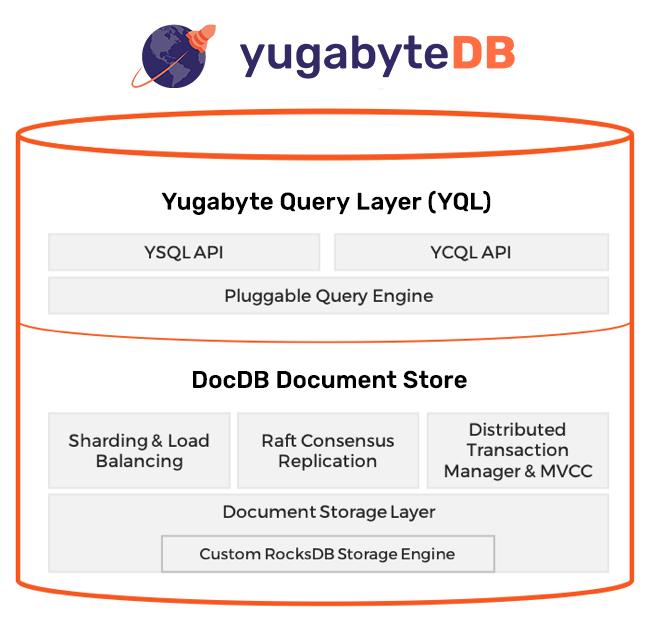

A distributed SQL database is a complex integration of software comprised of several components:

- API layer responsible for language-specific query compilation, execution & optimization

- Replication & transaction coordination

- Data sharding & load balancing

- Failure detection & recovery components

- Last but not least, a per-node (or node-local) storage engine

Specifically, YugabyteDB is comprised of two high level layers – Yugabyte Query Layer (YQL) is the API layer and DocDB is the distributed document store.

YugabyteDB Logical Architecture

YQL implements the APIs supported by YugabyteDB and runs on DocDB, the datastore common across all YQL APIs.

Irrespective of whether a database is distributed or monolithic, a well-designed per-node storage engine is the critical to reliability, efficiency, and performance of the database. This is the precise reason why DocDB uses a highly customized version of RocksDB as its per-node storage engine. As highlighted in our next post “Extending RocksDB for Speed & Scale”, this engine is fully optimized for high performance and large datasets. All changes made to RocksDB are distributed as part of YugabyteDB, an open source Apache 2.0 project.

Why RocksDB?

We were data infrastructure engineers at Facebook during the years when RocksDB was under initial development. Watching RocksDB grow right in front of us into an ideal datastore for low latency and high throughput storage (such as flash SSDs) was exciting to say the least.

When we started the YugabyteDB project in early 2016, RocksDB was already a mature project thanks to its wide adoption across Facebook and other web-scale companies including Yahoo and LinkedIn. This gave us the confidence to use it as a starting point for YugabyteDB.

The other two other key factors in our decision to use RocksDB were its Log Structured Merge tree (LSM) design and our desire to implement YugabyteDB in C++.

LSM vs. B-Tree: Is There a Clear Winner?

LSM storage engines like RocksDB have become the de facto standard today for handling workloads with fast-growing data especially as SSDs keep getting cheaper.

Google’s OLTP databases, namely Spanner and its precursor BigTable, use an LSM store. Facebook’s user databases migrated away from using MySQL with an InnoDB (B-Tree) engine to MySQL with RocksDB-based MyRocks (LSM) engine. MongoDB’s WiredTiger storage engine offers an LSM option as well even though MongoDB itself does not support that option. Newer time series data stores such as InfluxDB also follow the LSM design.

As previously described in “A Busy Developer’s Guide to Database Storage Engines”, there are two primary reasons behind LSM’s overwhelming success over B-Trees.

The first reason is that writes in an LSM engine are sequential, and SSDs handle sequential writes much better than random writes. As explained in this Seagate article, sequential writes dramatically simplifies garbage collection at the SSD layer while random writes (as in a B-Tree engine) causes SSD fragmentation. The defragmentation process impacts SSD performance significantly.

Secondly, even though the conventional wisdom is that B-Trees are better for read intensive-workloads, the reality is that this wisdom is inaccurate for many modern workloads.

- LSMs require more read IOPS from the drives in general. However, unlike HDDs, SSDs offer very high read throughput at microsecond latencies for this to be a major issue with modern hardware.

- For random read workloads, bloom filters help reduce the number of read IOPS often making it close to B-Tree style workloads by trading off some CPU.

- For read-recent workloads (e.g., “Get top 50 messages of an inbox or recent 6 hours of activity for a device”), LSMs outperform B-Trees. This is due to the fact that in an LSM data is naturally time partitioned, while still being clustered by say the “user id” (in an Inbox-like application) or “device id” (as in a IOT or time series application).

Language Matters: C++, Java, or Go?

RocksDB is written in C++ with the intent of exploiting the full throughput and low-latency of fast SSDs & non-volatile memory (NVM). For similar reasons, YugabyteDB is implemented in C++.

We didn’t want to put an inter-language switch in the critical path of a database engine’s IO. For example, CockroachDB, another database that uses RocksDB as its storage engine, incurs a Go <=> C++ switch in the critical IO path because the query execution layer is in Go language and storage engine (RocksDB) is C++. The number of these language-boundary hops needed to process fairly simple queries can still be significant and this is one of the factors that adversely impacts performance.

We resisted the temptation to develop a high performance database in a garbage collected language such as Java (e.g., Apache Cassandra) or Go (e.g., CockroachDB). Such implementations are unable to push fast storage to its full potential or to leverage large amounts of RAM.

By virtue of YugabyteDB and RocksDB both being in C++, we are able to achieve an in-depth & low-overhead integration of RocksDB with the rest of the system. Such an integration allows us to pass data as efficiently as possible (often by reference) between the various components of the system.

Introducing DocDB

As noted previously, DocDB is YugabyteDB’s distributed document store. Irrespective of the API used, every row managed by YugabyteDB is internally stored in DocDB as a separate document. This enables YugabyteDB to have a pluggable API architecture where new APIs can be added on top of the common document data model. Each DocDB document is mapped to multiple key-value pairs in RocksDB, the underlying per-node storage engine.

Data Types

DocDB extends RocksDB to efficiently handle documents that include both primitive and non-primitive types (such as maps, collections, and JSON). The values in DocDB can be:

- Primitive types: such as int32, int64, double, text, timestamp, etc.

- Non-primitive types (stored as sorted maps): Objects that map scalar subkeys to values, which could themselves be either scalar or nested object types.

Mapping Documents to RocksDB Key-Values

The DocDB data model allows multiple levels of nesting, and corresponds to a JSON-like format. Other data structures like lists, maps and sorted sets are implemented using DocDB’s object type with special key encodings.

For example, consider the following document stored in DocDB.

user-123 = {

addr = {

city = San Francisco

zip = 94103

},

age = 36

}The above document is stored in RocksDB as a set of keys. Each key stored in RocksDB consists of a number of components, where the first component is a “document key”, followed by a few scalar components, and finally followed by a MVCC timestamp (sorted in reverse order). When we encode primitive values in keys, we use a binary-comparable encoding, so that the sort order in RocksDB ends up to be the desired one.

Assume that the sample document above was written at time T10 entirely. Internally this is stored using five RocksDB key-value pairs:

user-123, T10 -> {} // This is an init marker

user-123, addr, T10 -> {} // This is a sub-document init marker

user-123, addr, city, T10 -> San Francisco

user-123, addr, zip, T10 -> 94103

user-123, age, T10 -> 36DocDB uses RocksDB as an append-only store so that deletions of documents or nested documents are performed by writing a single tombstone marker at the corresponding level. Customized read hooks automatically recognize these special markers and suppress expired data. Expired values within the sub-document are cleaned up/garbage collected by our customized compaction hooks.

Serving Workloads with Low Latency & High Throughput

DocDB ensures that the database read/write operations can be executed at the lowest cost possible by using multiple techniques. The following are a few examples:

- Fine-grained updates to only a small subset of a row (such as a column in the YSQL API) or an element in a collection (such as in the YCQL API) without incurring a read-modify-write penalty of the entire row or collection.

- Delete/overwrite of a row or collection/object at an arbitrary nesting level without incurring a read penalty to determine the specific set of KVs that need to be deleted

- Efficient row/object-level TTL handling by tightly hooking into the “read/compaction” layers of the underlying RocksDB KV store.

Summary

RocksDB is arguably one of the most successful open source data infrastructure projects in the last decade. It is second to none when it comes to fast monolithic key-value storage. YugabyteDB leverages RocksDB’s strengths in the context of embedding it as the per-node storage engine of DocDB, its distributed document store. Every row managed by YugabyteDB is stored as a document in DocDB that internally maps to multiple key-value pairs in RocksDB. As described in our next post “Enhancing RocksDB for Speed & Scale”, we also had to make significant enhancements to RocksDB in this context. We hope our design choices and lessons learnt help guide engineering teams looking to leverage RocksDB beyond its design original intent.

Building a massively scalable, high performance SQL database is a hard engineering problem to say the least. Using RocksDB as a foundational building block certainly made life easier for us as an engineering team. Needless to say, we are thankful to the entire RocksDB community for their phenomenal work over the years.

What’s Next?

- Compare YugabyteDB in depth to databases like CockroachDB, Google Cloud Spanner, and MongoDB.

- Get started with YugabyteDB on macOS, Linux, Docker, and Kubernetes.

- Contact us to learn more about licensing, pricing, or to schedule a technical overview.